A French version of this article is available here.

The ranking of players in general, and of chess players in particular, has been studied for almost 80 years. Until 1970 there were many different systems, such as the Ingo system (1948), designed by Anton Hoesslinger and used by the German federation; the Harkness system (1956), designed by Kenneth Harkness and used by the USCF; and the English system, designed by Richard Clarke. All these systems, which were mostly "rule of thumb" systems, were replaced in almost every chess federation by the ELO system around 1970. The ELO system, the first to have a sound statistical basis, was designed by Arpad Elo on the assumption that the performance of a player in a game is a normally distributed random variable. Later, other systems were proposed to refine the ELO system, such as the Chessmetrics system designed by Jeff Sonas, or the Glicko system designed by Mark Glickman, which is used on many online playing sites. All these systems, however, share a similar goal: to infer a ranking from the results of the games played, not from the moves played.
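To make the normal-distribution assumption concrete, here is a minimal Python sketch of the expected score it implies. It rests on stated assumptions: each player's performance in a game is taken as normally distributed around their rating with a standard deviation `SIGMA` of 200 points (the classic value attributed to Elo's model, treated here as a parameter), and ratings are updated with a hypothetical K-factor of 20. Federations use their own tables and K-factors, so this is an illustration rather than any federation's exact procedure.

```python
import math

# Assumed per-player performance standard deviation (classic Elo model value).
SIGMA = 200.0


def expected_score(r_a: float, r_b: float, sigma: float = SIGMA) -> float:
    """Probability that A out-performs B when each performance ~ N(rating, sigma^2)."""
    # The difference of two independent normals has standard deviation sigma * sqrt(2).
    z = (r_a - r_b) / (sigma * math.sqrt(2.0))
    # Standard normal CDF expressed with the error function.
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def update(rating: float, opponent: float, score: float, k: float = 20.0) -> float:
    """New rating after one game; score is 1 (win), 0.5 (draw) or 0 (loss)."""
    # Only the result enters the update: the quality of the moves is never used.
    return rating + k * (score - expected_score(rating, opponent))


print(expected_score(1700, 1500))  # roughly 0.76
print(update(1500, 1500, 1.0))     # 1510.0
```

The comment in `update` is exactly the limitation discussed in the text: a win earns the same points whatever the quality of the moves played by either side.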

Thus it is possible to win points and improve one's ranking in a game even without playing well, as long as the opponent makes more mistakes. Statistically, this bias should disappear over a large number of games. However, the ELO system (and all related systems) is only effective for comparing players of the same period, because points are won or lost in head-to-head games. It is extremely difficult to compare the ELO ratings of 2017 with those of 1970, a problem known as "drifting through years". There is thus a large literature (such as Raymond Keene and Nathan Divinsky, Warriors of the Mind: A Quest for the Supreme Genius of the Chess Board) discussing who was the best player ever, but all its conclusions remain highly debatable.

In 2006, Guid and Bratko (Computer analysis of World Chess Champions, ICGA Journal, 29-2, 2006) did remarkable and pioneering work, advocating the idea of ranking players by using a computer program to analyze the moves played and assess their quality. However, their work was criticized on several grounds: the chess program they used had an ELO rating of only 2700 in 2006; they lacked computing power, so the program was run at a limited search depth; and the sample analyzed was small. The main criticism, however, is more fundamental: how can one decide who is the better player between one who plays the exact right move most of the time but occasionally makes serious blunders, and one who always plays good (but not the best) moves and almost never blunders?
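The basic idea of scoring players by the quality of their moves can be sketched in a few lines of Python. This is a simplified illustration, not Guid and Bratko's exact procedure: it assumes a hypothetical input of `(player, best_eval, played_eval)` records, with evaluations in pawns from the point of view of the player to move (as some engine would report them), and averages the evaluation loss per move for each player.

```python
from collections import defaultdict
from statistics import mean


def average_error(records):
    """Mean evaluation loss per move for each player.

    records: iterable of (player, best_eval, played_eval) tuples, evaluations in
    pawns from the point of view of the player to move (hypothetical format).
    """
    losses = defaultdict(list)
    for player, best_eval, played_eval in records:
        # The engine's best move should not evaluate lower than the move played;
        # clamp at zero in case search noise makes it do so.
        losses[player].append(max(0.0, best_eval - played_eval))
    return {player: mean(values) for player, values in losses.items()}


games = [
    ("steady", 0.50, 0.25), ("steady", 0.50, 0.25),  # two small inaccuracies
    ("sharp", 0.50, 0.50), ("sharp", 0.50, 0.00),    # one exact move, one larger error
]
print(average_error(games))  # {'steady': 0.25, 'sharp': 0.25}
```

Both hypothetical players get the same average error, although one finds the exact move half of the time and the other never does: this is the fundamental criticism raised above, since a single average cannot tell these two profiles apart.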

In 2012, Diogo Ferreira (Determining the strength of chess players based on actual play, ICGA Journal, 35-1, 2012) refined the idea. He computed the difference between the evaluation of the move played and the evaluation of the best move found by the computer, and treated the distribution of these differences as a probability distribution for each player. By computing the convolution of the distributions of two players, he was able to compute the expected result of a game between them. However, his work also suffered from a lack of computing power, and probably contained a small methodological inaccuracy in the interpretation of the convolution of distributions. But a central difficulty remained: the problem of context. A small error is almost insignificant in a position that is already heavily unbalanced in favor of one of the players, while it might be a killing mistake in a tight game; Ferreira's method could not address this problem.
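The convolution step can be illustrated with a small Python sketch. It is a toy under explicit assumptions, not Ferreira's exact formulation (the article itself points to a subtlety in how the result should be interpreted): each player's losses are given as a hypothetical discrete distribution over integer centipawn values, White's and Black's losses are assumed independent, and the sketch computes the distribution of the net swing per move pair (Black's loss minus White's loss), which is the convolution of Black's distribution with the mirror image of White's.

```python
from collections import defaultdict


def net_swing_distribution(p_white, p_black):
    """Distribution of black_loss - white_loss (centipawns, positive favors White).

    p_white, p_black: dicts mapping a loss in centipawns to its probability.
    Summing over all pairs is the discrete convolution of p_black with the
    reflected p_white, valid under the independence assumption.
    """
    out = defaultdict(float)
    for loss_w, prob_w in p_white.items():
        for loss_b, prob_b in p_black.items():
            out[loss_b - loss_w] += prob_w * prob_b
    return dict(out)


def swing_probabilities(dist):
    """P(White gains), P(no change), P(Black gains) over one move pair."""
    gain = sum((p for d, p in dist.items() if d > 0), 0.0)
    even = dist.get(0, 0.0)
    loss = sum((p for d, p in dist.items() if d < 0), 0.0)
    return gain, even, loss


white = {0: 0.5, 50: 0.5}  # hypothetical: exact half the time, else a 50 cp slip
black = {0: 1.0}           # hypothetical: never loses anything
print(swing_probabilities(net_swing_distribution(white, black)))  # (0.0, 0.5, 0.5)
```

Note that nothing in these distributions depends on the position in which an error was made, which is the problem of context described above.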

The article (available below), published in the ICGA Journal (ICGA Journal, 39-1, 2017), extensively reviews these ranking methods, explains their strengths and weaknesses, and evaluates them on a very large corpus of 26,000 games (all games played by World Champions from Wilhelm Steinitz to Magnus Carlsen), analyzed by the best available program (Stockfish, which with the settings used has a strength of around 3100 to 3200 ELO points) at regular tournament time controls (62,000 CPU hours were needed on the OSIRIM cluster of the Institut de Recherche en Informatique de Toulouse).

It also demonstrates that, still using a computer program to evaluate moves, all the problems mentioned above can be solved by modeling chess as a Markovian process. Using this method and some linear algebra, it is thus possible to obtain a ranking that is more reliable and that can compare players across the years. Other interesting points also arise from the statistical analysis of this large database of chess games. For example, chess players appear to perform better with the white pieces than with the black pieces, probably for psychological reasons: since black is usually assumed to have a small disadvantage in chess, playing with black probably encourages more risk-taking, and thus more mistakes.
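The Markovian idea can be sketched with NumPy. This is a toy model under explicit assumptions, not the article's actual state space or data: positions are reduced to three hypothetical evaluation buckets plus three absorbing results, each player is described by a made-up transition matrix giving the probability of going from one bucket to another (or to a result) with their move, and the "linear algebra" shown is the standard absorbing-chain computation B = (I - Q)^-1 R, which gives the probability of each final result from each starting bucket.

```python
import numpy as np

# Hypothetical states: three evaluation buckets (White's point of view),
# followed by three absorbing results.
STATES = ["black_better", "equal", "white_better", "1-0", "1/2-1/2", "0-1"]
N_TRANSIENT = 3

# Made-up per-player transition matrices: row = state before the move,
# column = state after it. Each row sums to 1; result rows are absorbing.
WHITE = np.array([
    [0.60, 0.25, 0.05, 0.00, 0.05, 0.05],
    [0.10, 0.70, 0.15, 0.00, 0.05, 0.00],
    [0.00, 0.20, 0.60, 0.15, 0.05, 0.00],
    [0.00, 0.00, 0.00, 1.00, 0.00, 0.00],
    [0.00, 0.00, 0.00, 0.00, 1.00, 0.00],
    [0.00, 0.00, 0.00, 0.00, 0.00, 1.00],
])
BLACK = np.array([
    [0.65, 0.20, 0.00, 0.00, 0.05, 0.10],
    [0.15, 0.70, 0.10, 0.00, 0.05, 0.00],
    [0.05, 0.25, 0.55, 0.10, 0.05, 0.00],
    [0.00, 0.00, 0.00, 1.00, 0.00, 0.00],
    [0.00, 0.00, 0.00, 0.00, 1.00, 0.00],
    [0.00, 0.00, 0.00, 0.00, 0.00, 1.00],
])


def result_probabilities(white: np.ndarray, black: np.ndarray) -> np.ndarray:
    """Row i: P(1-0), P(draw), P(0-1) when starting in transient state i."""
    move_pair = white @ black                  # one White move, then one Black move
    q = move_pair[:N_TRANSIENT, :N_TRANSIENT]  # transient -> transient
    r = move_pair[:N_TRANSIENT, N_TRANSIENT:]  # transient -> absorbing
    # Solve (I - Q) B = R instead of inverting I - Q explicitly.
    return np.linalg.solve(np.eye(N_TRANSIENT) - q, r)


def expected_score(white: np.ndarray, black: np.ndarray, start: str = "equal") -> float:
    """White's expected score (win = 1, draw = 0.5) from the given starting bucket."""
    p_win, p_draw, _ = result_probabilities(white, black)[STATES.index(start)]
    return float(p_win + 0.5 * p_draw)


print(expected_score(WHITE, BLACK))
```

Unlike the distribution approach, the probability of each kind of move depends on the bucket the game is currently in, which is how a Markov model can take context into account. The numbers here are invented; a real model would estimate them from engine-evaluated games.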

The question most people ask at this point is: "So, who is (or was) the best?" As is often the case with the simplest questions, there is no trivial answer. Distribution and Markovian methods do not produce a ranking; they only provide a way to compare a pair of players. However, a simple (and partial) answer is given in the following table, which is extracted from the article. Each cell gives the row player's expected result, in percent, in a game against the column player, each taken in their best year (Carlsen: 2013, Kramnik: 1999, Fischer: 1971, Kasparov: 2001, Anand: 2008, Khalifman: 2010, Smyslov: 1983, Petrosian: 1962, Karpov: 1988, Kasimdzhanov: 2011, Botvinnik: 1945, Ponomariov: 2011, Lasker: 1907, Spassky: 1970, Topalov: 2008, Capablanca: 1928, Euwe: 1941, Tal: 1981, Alekhine: 1922, Steinitz: 1894). The table is not symmetric because playing first is not the same as playing second. The left column is more or less a ranking of the 20 World Chess Champions. However, to understand all the ins and outs of the method, you should read the complete article.

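|  | Carlsen | Kramnik | Fischer | Kasparov | Anand | Khalifman | Smyslov | Petrosian | Karpov | Kasimdzhanov | Botvinnik | Ponomariov | Lasker | Spassky | Topalov | Capablanca | Tal | Euwe | Alekhine | Steinitz |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Carlsen | - | 52 | 54 | 54 | 57 | 58 | 57 | 58 | 56 | 60 | 61 | 59 | 60 | 61 | 61 | 64 | 66 | 69 | 70 | 82 |
| Kramnik | 49 | - | 52 | 52 | 55 | 56 | 56 | 57 | 55 | 59 | 60 | 58 | 60 | 60 | 60 | 63 | 65 | 68 | 70 | 83 |
| Fischer | 47 | 49 | - | 51 | 53 | 57 | 56 | 57 | 56 | 59 | 60 | 60 | 61 | 61 | 62 | 64 | 68 | 70 | 73 | 85 |
| Kasparov | 47 | 49 | 50 | - | 53 | 54 | 54 | 54 | 53 | 57 | 58 | 56 | 56 | 58 | 58 | 60 | 62 | 66 | 68 | 82 |
| Anand | 44 | 46 | 48 | 48 | - | 54 | 52 | 53 | 53 | 57 | 56 | 57 | 57 | 59 | 59 | 62 | 64 | 69 | 71 | 86 |
| Khalifman | 43 | 45 | 44 | 47 | 47 | - | 50 | 51 | 52 | 53 | 54 | 55 | 55 | 56 | 56 | 60 | 62 | 64 | 67 | 79 |
| Smyslov | 43 | 45 | 45 | 47 | 49 | 51 | - | 50 | 51 | 53 | 55 | 54 | 54 | 54 | 55 | 59 | 63 | 64 | 68 | 82 |
| Petrosian | 43 | 44 | 45 | 47 | 49 | 50 | 51 | - | 52 | 53 | 54 | 54 | 55 | 55 | 56 | 59 | 63 | 63 | 67 | 80 |
| Karpov | 44 | 46 | 45 | 48 | 48 | 49 | 50 | 49 | - | 51 | 52 | 52 | 52 | 52 | 52 | 56 | 58 | 60 | 63 | 76 |
| Kasimdzhanov | 41 | 43 | 42 | 45 | 45 | 48 | 48 | 48 | 50 | - | 52 | 52 | 52 | 54 | 53 | 56 | 60 | 62 | 65 | 80 |
| Botvinnik | 40 | 41 | 41 | 44 | 45 | 48 | 46 | 48 | 49 | 49 | - | 50 | 54 | 52 | 52 | 56 | 60 | 60 | 64 | 80 |
| Ponomariov | 42 | 43 | 41 | 45 | 44 | 47 | 47 | 47 | 49 | 49 | 51 | - | 51 | 52 | 52 | 55 | 58 | 59 | 62 | 77 |
| Lasker | 41 | 41 | 40 | 45 | 44 | 46 | 47 | 46 | 49 | 49 | 48 | 50 | - | 51 | 50 | 54 | 58 | 59 | 63 | 78 |
| Spassky | 40 | 41 | 40 | 43 | 42 | 45 | 47 | 46 | 48 | 47 | 49 | 49 | 50 | - | 51 | 53 | 58 | 57 | 61 | 75 |
| Topalov | 40 | 41 | 39 | 44 | 42 | 45 | 46 | 45 | 49 | 48 | 49 | 49 | 50 | 51 | - | 54 | 57 | 57 | 61 | 75 |
| Capablanca | 37 | 38 | 37 | 41 | 39 | 42 | 42 | 42 | 45 | 45 | 45 | 47 | 47 | 48 | 47 | - | 53 | 54 | 59 | 76 |
| Tal | 35 | 36 | 34 | 39 | 37 | 39 | 39 | 38 | 43 | 41 | 41 | 43 | 43 | 43 | 44 | 48 | - | 49 | 54 | 72 |
| Euwe | 32 | 33 | 32 | 36 | 32 | 37 | 37 | 38 | 41 | 39 | 41 | 42 | 43 | 44 | 44 | 47 | 52 | - | 56 | 75 |
| Alekhine | 31 | 31 | 29 | 34 | 30 | 35 | 33 | 35 | 38 | 36 | 37 | 39 | 38 | 40 | 40 | 43 | 47 | 45 | - | 69 |
| Steinitz | 20 | 19 | 17 | 20 | 16 | 22 | 19 | 22 | 25 | 22 | 22 | 25 | 24 | 27 | 27 | 26 | 30 | 27 | 33 | - |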
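Since these methods only compare pairs of players, turning such a table into a single ordering requires an extra aggregation step. One simple option, shown here as a hypothetical illustration rather than the method used in the article, is to sort players by their mean expected score against the others. The sketch uses the values of the first four rows and columns of the table (Carlsen, Kramnik, Fischer, Kasparov).

```python
from statistics import mean

# Expected score (%) of the row player against the column player,
# copied from the first four rows and columns of the table.
SCORES = {
    "Carlsen":  {"Kramnik": 52, "Fischer": 54, "Kasparov": 54},
    "Kramnik":  {"Carlsen": 49, "Fischer": 52, "Kasparov": 52},
    "Fischer":  {"Carlsen": 47, "Kramnik": 49, "Kasparov": 51},
    "Kasparov": {"Carlsen": 47, "Kramnik": 49, "Fischer": 50},
}


def rank_by_mean_score(scores):
    """Order players by their average expected score against all listed opponents."""
    return sorted(scores, key=lambda player: mean(scores[player].values()), reverse=True)


print(rank_by_mean_score(SCORES))  # ['Carlsen', 'Kramnik', 'Fischer', 'Kasparov']
```

Other aggregation rules (for example counting how many opponents a player is expected to beat) are equally plausible and, on the full table, may give a slightly different order.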

This method can be used for any two-player game as long as an "oracle" (i.e. a computer program strong enough to evaluate moves reliably) is available. This includes checkers, Reversi, backgammon, and probably soon Go.

The complete draft of the article is available here in PDF. It can also be read online here, and it is also available in epub, mobi and azw3 formats for reading on various devices.

The PDF draft is almost identical to the final article published in the ICGA Journal, except for the page layout and some very minor modifications. The other formats might be less readable because of the conversion of mathematical formulas, but their text is also identical to the original paper.

I want to thank again Jaap van den Herik, who was the main editor of this article and is now the honorary editor of the journal, not only for his (numerous) corrections but especially for publishing the full article, without any cuts or reductions, despite its length.

I also want to thank all the referees who worked on the article. They greatly helped improve the paper through a process of corrections and modifications that lasted almost a year. They have chosen to remain anonymous, but I am indebted to them.

The original article can be consulted and ordered from the IOS Press website.

There was also a press release, an article in the CNRS journal and an article on the ChessBase website about this work. In French, there were also articles in various mainstream media: L'Express, 20 minutes, La Dépêche, Le Figaro.

Like any scientific article, this one needs to be read, commented on, criticized and verified, even though it has been published in a peer-reviewed journal. The full PGN database of evaluated games can be downloaded here, so anybody can download it and check all the results presented in the paper.

The exact reference of the article is:

@Article{,
author = {Jean-Marc Alliot},
title = {Who is the master?},
journal = {ICGA Journal},
year = {2017},
volume = {39},
number = {1},
OPTpages = {},
OPTmonth = {},
note = {DOI 10.3233/ICG-160012}
}

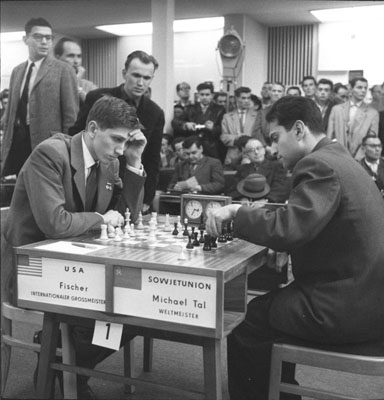

Photo by Bundesarchiv, Bild 183-76052-0335 / Kohls, Ulrich / CC-BY-SA 3.0, CC BY-SA 3.0 de, https://commons.wikimedia.org/w/index.php?curid=5665206

The download and use of documents or photographs from this site is allowed only if their provenance is explicitly stated, and only for non-profit, educational or research activities.

All rights reserved.

Last modification: 15:45, 02/21/2024